Last week marked the annual hacking and security conference SEC-T, which took place in Stockholm. The conference is a popular event in the security industry, with a focus on providing the audience with high-quality talks and in-depth Q&A sessions with speakers. Sectra’s security expert Leif Nixon was invited to speak at the conference to share a true story from his former job, when a number of European supercomputers were discovered to be mining Bitcoin. In this article, we will highlight the key takeaways from this discovery and the story around it. We will also dig a bit deeper into why it is so difficult to forge misleading evidence in an IT environment: a forger often leaves traces that are themselves difficult to falsify.

The story starts at the Large Hadron Collider (LHC) at CERN, a 27-kilometer ring-shaped tunnel about 100 meters underground where particles are accelerated to near-light speed in both directions. The particles collide at four points along the ring, each home to an experiment. One of them is Atlas (also known as “The Atlas Experiment”), which Leif was involved in.

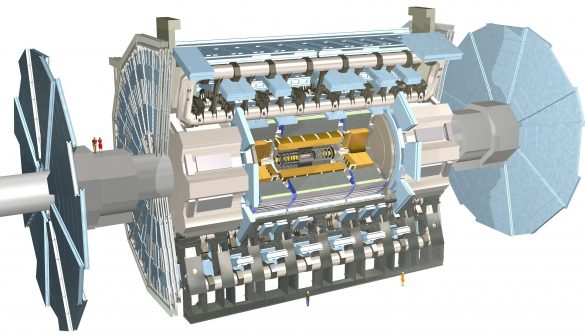

The Atlas detector itself is a big piece of machinery (see image below). The particles pass through this machine and the collisions take place in the middle. Layers and layers of sensors and detectors then try to figure out what happens when the particles collide.

The Atlas detector and the other three experiments produce enormous amounts of data, far more than CERN can handle on its own. The data is therefore stored on “the Grid,” an international network of computing sites organized in tiers. The most important of these are the Tier 1 (T1) sites: major scientific centers with huge networks that send data around the world. One of the largest T1 sites on the Grid is the Nordic Data Grid Facility (NDGF), where Leif worked at the time. NDGF is a Nordic collaboration that handles data produced by the LHC.

Below the T1 centers in the Grid are T2 and T3 centers, and all of these centers and their systems are connected through a global infrastructure. This raises an important question: how do you access all these systems? The answer is simple: you have to enter the Grid. Actually doing so, however, is not so simple.

How to enter the Grid:

- Write a job description, including why you want to run a computation job on certain data from the LHC. You need to fill out all sorts of details about what the data is intended for and what is needed for the job.

- Send the document through a Workload Management System to be evaluated.

- Eventually the job is sent to a computing resource attached to the Grid. It could, for example, be sent to a supercomputer at NSC, a national supercomputer center in Linköping, Sweden, where Leif used to work.

The Grid consists of around one million computer cores spread across 170 sites in 42 countries. To put it mildly, security around these systems is rather important.

Each of these 170 sites has one or two security officers, and a subgroup of them forms an Incident Response Task Force (IRTF). The person responsible for this group is known as the Head of IRTF. At the time, this role was held by Leif.

There were lots of security incidents, which is normal for infrastructure of this size, but none of them were actually related to the Grid and its global infrastructure. That changed in January 2014.

January 2014

In January 2014, something very suspicious was discovered. Site admins at a T1 center in France noticed that a number of BLAST jobs (BLAST is a widely used tool for comparing biological sequences such as DNA and proteins) submitted by the user “Paul” were behaving strangely. They took a closer look at the jobs and performed a forensic analysis of the data. What they found was surprising: someone was using these jobs to mine Bitcoin on supercomputers.

The IRTF started an investigation, which revealed that 35,025 individual jobs had been run across several sites over the previous few weeks, consuming around 100 CPU-years of processing power at a cost of about EUR 10,000.
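As a rough sanity check on these figures, dividing the total compute by the number of jobs gives the average size of each job. This assumes 8,766 hours per year (365.25 days) and treats the reported totals as exact even though they are approximations, so the results are only indicative:

$$
\frac{100\ \text{CPU-years} \times 8766\ \text{h/year}}{35\,025\ \text{jobs}} = \frac{876\,600\ \text{CPU-hours}}{35\,025\ \text{jobs}} \approx 25\ \text{CPU-hours per job}
$$

$$
\frac{\text{EUR}\ 10\,000}{876\,600\ \text{CPU-hours}} \approx \text{EUR}\ 0.011\ \text{per CPU-hour}
$$

In other words, each disguised job amounted on average to roughly one day of single-core compute.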

“Paul,” who submitted the BLAST jobs, had his access to the Grid suspended and all sites were alerted to look for suspicious behavior. As Head of IRTF, Leif was appointed incident coordinator.

Almost immediately, the IRTF received a report from the local site where “Paul” had submitted the suspicious jobs. The computer that had sent the jobs had been inspected by the local system administrator, who turned out to be “Paul” himself. His report stated that the computer had been compromised and had various malware installed, with logs indicating logins from China. “Paul” declared the case closed.

Not so fast—how about the filesystem timeline?

Leif was not satisfied with this report. He wanted to perform a root cause analysis and have a look at the compromised machine himself—which he finally had the chance to do.

Leif started by looking at the filesystem timeline to see what had happened in the filesystem and when. The team also checked the system logs to confirm that they were unbroken.

When analyzing the filesystem timeline, there are three different timestamps that need to be looked at:

- mtime – modification time: the last time the file contents were changed

- ctime – change time: the last time the file contents or inode changed

- atime – access time: the last time the file was read

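The mtime and atime can easily be set to arbitrary values, but the ctime cannot be set directly: the operating system stamps it with the current system time whenever the inode changes.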
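The asymmetry between these timestamps is easy to demonstrate. The following minimal Python sketch, assuming a Linux or other POSIX system (on Windows, `st_ctime` has traditionally meant creation time instead), backdates a temporary file with `os.utime`, which accepts only access and modification times. The helper name `backdate` and the December 2013 date are illustrative choices, not details from the incident:

```python
import os
import tempfile
import time


def backdate(path: str, fake_epoch: float) -> os.stat_result:
    """Set a file's atime and mtime to fake_epoch and return its fresh stat()."""
    # os.utime takes (atime, mtime) only. There is no ctime parameter: because
    # the inode just changed, the kernel sets ctime from the current system clock.
    os.utime(path, (fake_epoch, fake_epoch))
    return os.stat(path)


if __name__ == "__main__":
    # Hypothetical "forged" timestamp: noon on 4 December 2013, local time.
    fake = time.mktime((2013, 12, 4, 12, 0, 0, 0, 0, -1))
    fd, path = tempfile.mkstemp()
    os.close(fd)
    try:
        st = backdate(path, fake)
        print("mtime:", time.ctime(st.st_mtime))  # the forged date
        print("atime:", time.ctime(st.st_atime))  # the forged date
        print("ctime:", time.ctime(st.st_ctime))  # the real time of the change
    finally:
        os.remove(path)
```

On a typical Linux system, the first two lines show the forged date while the ctime line shows when the script ran. To make the ctime lie as well, a forger has to change the system clock before touching the file, or edit the raw disk directly.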

The filesystem contained many thousands of files, each with multiple timestamps, so there was a lot of data to sort through to identify important events. Leif and his team went through the timeline but found nothing of interest, except malware that suddenly appeared out of thin air on December 4, 2013. There was no explanation for its arrival, and no further clues up to the point where the system was shut down on January 9, 2014.

Next step: analyzing the logs

Since nothing was found on the timeline, they went on to analyze the logs. But there was one important question to be answered first—could they trust the logs?

Leif Nixon: “Since the system seemed to have been compromised, we couldn’t trust anything on the system. But if the logs were intact, maybe we could at least detect tampering. If we can’t detect tampering, then maybe we can trust the logs. This is how you have to work with this type of data. There is always limited trust.

“What you need to do is to try to build a narrative. You make all the observations of events and data that you can, and then you try to put it together and try to tell a story about the system that is consistent and seems reasonable. And then lastly try to explain how the system got into this state.”

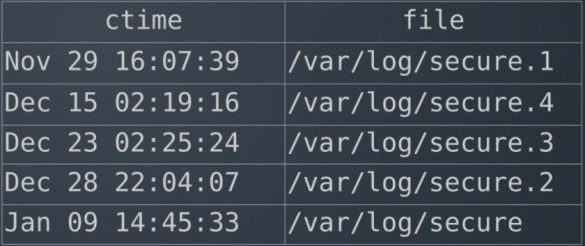

The team did this by rotating the logs, so every log file was renamed. This meant that the metadata was changed, thus updating the ctime. Therefore, every log file should have had the same ctime—the time of last rotation. The result looked like this:

The numbers did not make sense at all: the log files were nowhere near sharing the same ctime. The system had clearly been tampered with, but the result was so confusing that it was impossible to tell how.
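A consistency check like this is straightforward to script. The sketch below is hypothetical: it assumes logrotate-style numbered file names (such as `messages.1` or `syslog.2.gz`), skips the live log (which is still being written to and so has a newer ctime), and uses an arbitrary 60-second tolerance around the median ctime. It flags rotated logs whose ctime does not match the rest:

```python
from __future__ import annotations

import statistics
from pathlib import Path


def rotated_log_ctimes(log_dir: str, pattern: str = "*.[0-9]*") -> dict[str, float]:
    """Collect ctimes of rotated log files such as messages.1 or syslog.2.gz."""
    # The glob pattern is an assumption about numbered rotation names; the live
    # log (e.g. plain "syslog") does not match and is deliberately excluded.
    return {
        str(p): p.stat().st_ctime
        for p in sorted(Path(log_dir).glob(pattern))
        if p.is_file()
    }


def ctime_outliers(ctimes: dict[str, float], tolerance_s: float = 60.0) -> list[str]:
    """Return files whose ctime lies more than tolerance_s from the median ctime."""
    if not ctimes:
        return []
    median = statistics.median(ctimes.values())
    return sorted(p for p, t in ctimes.items() if abs(t - median) > tolerance_s)


if __name__ == "__main__":
    import sys

    # Pass one or more directories, e.g. a read-only mounted copy of /var/log.
    for log_dir in sys.argv[1:]:
        for path in ctime_outliers(rotated_log_ctimes(log_dir)):
            print("ctime does not match the last rotation:", path)
```

Using the median rather than the newest ctime means a single tampered file cannot shift the reference point for all the others.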

Even though the logs couldn’t be trusted, the team found two root logins from Chinese IP addresses in them. Could this be some kind of clue?

It turned out that these IP addresses were not actually routed on the Internet and had not been taken into use. In other words, nobody in China could have connected over the Internet from these addresses, so the logins could not have been genuine. The team still had no usable clues as to what had actually happened.
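Checking whether an address was reachable at all comes down to asking whether it fell inside any prefix announced in global routing at the time. The article does not say how the team established this. The sketch below assumes you already have a historical list of announced prefixes (for example, exported from an archived BGP table), and it uses documentation-only example ranges rather than real data. `is_routed` and `unrouted_sources` are hypothetical helper names:

```python
import ipaddress
from typing import Iterable, List


def is_routed(addr: str, announced_prefixes: Iterable[str]) -> bool:
    """True if addr lies inside at least one announced prefix (IPv4 or IPv6)."""
    ip = ipaddress.ip_address(addr)
    # Membership across mismatched IP versions is simply False, not an error.
    return any(ip in ipaddress.ip_network(p) for p in announced_prefixes)


def unrouted_sources(login_sources: Iterable[str], announced_prefixes: Iterable[str]) -> List[str]:
    """Return login source addresses that no announced prefix covers."""
    prefixes = list(announced_prefixes)  # allow a one-shot iterable to be reused
    return [a for a in login_sources if not is_routed(a, prefixes)]


if __name__ == "__main__":
    # Hypothetical routing snapshot and login sources (documentation ranges only).
    snapshot = ["198.51.100.0/24", "2001:db8::/32"]
    logins = ["198.51.100.42", "203.0.113.7"]
    print(unrouted_sources(logins, snapshot))  # ['203.0.113.7']
```

A login recorded from an address outside every announced prefix cannot have arrived over the Internet, which makes the log entry itself suspect.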

What about deleted files?

The team was sure the system had been tampered with, but they did not know how. The next step was to restore deleted files and hope to find evidence there.

To restore a deleted file, the team needed an old copy of its metadata, that is, of its inode. But where would they find such a copy? If they were lucky, an old copy might still be left in the filesystem journal.
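The article does not name the filesystem. Assuming an ext3/ext4-style on-disk inode, where the first 100 bytes hold fixed fields such as the timestamps and the 15-entry `i_block` array, the sketch below decodes a raw inode copy and compares a live inode with an older copy found in the journal. ext3 clears the block pointers when a file is deleted, which is why an old journal copy can be the only remaining map to the file's data. Locating inode copies inside the journal is not shown, and all helper names are hypothetical:

```python
import struct
from datetime import datetime, timezone
from typing import Dict, NamedTuple, Tuple

# First 100 bytes of an ext2/ext3/ext4 on-disk inode, little-endian:
# i_mode, i_uid, i_size_lo, i_atime, i_ctime, i_mtime, i_dtime, i_gid,
# i_links_count, i_blocks_lo, i_flags, 4 bytes osd1 (skipped), i_block[15].
INODE_HEAD = struct.Struct("<HHIIIIIHHII4x15I")


class InodeCopy(NamedTuple):
    mode: int
    uid: int
    size: int
    atime: int
    ctime: int
    mtime: int
    dtime: int              # deletion time; 0 while the file is live
    gid: int
    links: int
    blocks: int
    flags: int
    block: Tuple[int, ...]  # block map or extent data, depending on flags


def parse_inode(raw: bytes) -> InodeCopy:
    """Decode the fixed-layout head of a raw inode (ext4 extra time fields ignored)."""
    fields = INODE_HEAD.unpack_from(raw)
    return InodeCopy(*fields[:11], block=tuple(fields[11:]))


def looks_recoverable(live: InodeCopy, journal_copy: InodeCopy) -> bool:
    """The live inode is deleted, but an older journal copy still maps data blocks."""
    deleted = live.dtime != 0 or live.links == 0
    return deleted and journal_copy.dtime == 0 and any(journal_copy.block)


def timestamps(inode: InodeCopy) -> Dict[str, datetime]:
    """Render the 32-bit, one-second-resolution timestamps as UTC datetimes."""
    return {
        name: datetime.fromtimestamp(getattr(inode, name), tz=timezone.utc)
        for name in ("atime", "ctime", "mtime", "dtime")
    }
```

When `looks_recoverable` returns True, the journal copy's `block` field points at where the deleted file's contents were, and its timestamps add further entries to the timeline.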

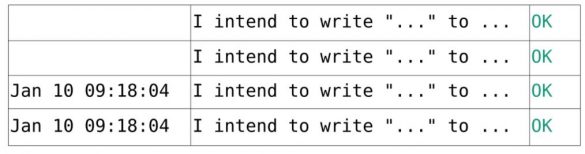

And yes, they found something interesting.

In this particular filesystem journal (pictured above), the format changed partway through and timestamps suddenly started to appear. That alone was strange, but the dates were even more suspicious. The system was shut down on January 9, yet there were write operations taking place on January 10. How was this possible?

The filesystem showed that the system had not been booted again, yet the filesystem had somehow been mounted. What was going on here?

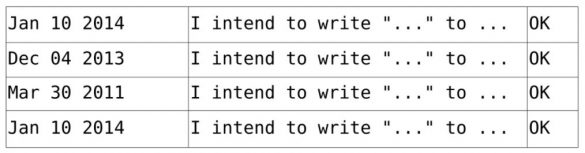

The picture above shows that the timestamps were not sequential—it looked like somebody was messing with time itself. All the dates in the journal corresponded to a particular piece of evidence on the host. The only plausible narrative that Leif, as the incident coordinator, could build that fit all the facts was:

- Jan 8: Malicious jobs discovered

- Jan 9: “Paul” shut down the machine

- Jan 10: Somebody with physical access mounted the disk from another machine and started changing the system clock while implanting fake data
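Both anomalies in the journal, writes dated after the shutdown and timestamps that jump backwards, can be flagged mechanically once transaction IDs and commit times have been extracted from it (parsing the journal itself is not shown). Transaction IDs increase with every commit, so a commit stamped earlier than the one before it suggests the clock was moved. In this sketch, `Commit` and `clock_anomalies` are hypothetical names, and the example values are made up, loosely echoing the dates in the narrative:

```python
from __future__ import annotations

from datetime import datetime
from typing import NamedTuple


class Commit(NamedTuple):
    seq: int        # journal transaction ID; increases with every commit
    time: datetime  # commit time, taken from the clock of whichever host wrote it


def clock_anomalies(commits: list[Commit], shutdown: datetime) -> dict[str, list[int]]:
    """Return transaction IDs that contradict a monotonic clock or a known shutdown."""
    ordered = sorted(commits, key=lambda c: c.seq)
    # A later transaction stamped earlier than its predecessor: the clock was set back.
    backwards = [cur.seq for prev, cur in zip(ordered, ordered[1:]) if cur.time < prev.time]
    # Writes stamped after the moment the machine is known to have been shut down.
    after_shutdown = [c.seq for c in ordered if c.time > shutdown]
    return {"clock_went_backwards": backwards, "after_shutdown": after_shutdown}


if __name__ == "__main__":
    # Made-up values for illustration only.
    journal = [
        Commit(101, datetime(2014, 1, 8, 10, 0)),
        Commit(102, datetime(2014, 1, 10, 9, 0)),
        Commit(103, datetime(2013, 12, 4, 12, 0)),
        Commit(104, datetime(2014, 1, 10, 11, 0)),
    ]
    print(clock_anomalies(journal, shutdown=datetime(2014, 1, 9, 18, 0)))
    # {'clock_went_backwards': [103], 'after_shutdown': [102, 104]}
```

Each flagged transaction is only a lead. As in the investigation, what matters is whether all of them fit into one consistent narrative.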

Was anyone charged with a crime?

All the evidence pointed towards “Paul.” But did he face any charges for using supercomputers for Bitcoin mining?

A three-person committee evaluated Leif’s report. They agreed with the technical evidence but could not positively identify any single responsible individual, even though “Paul” was the only person with physical access to the computer.

It is worth mentioning that the committee consisted of one IT security representative, one info security representative and one local representative—who ironically enough was “Paul.” Unfortunately, “Paul” was never charged with a crime.

So, what is the takeaway from this story?

Leif Nixon: “Firstly, don’t try to steal CPU time from supercomputers, because they are the only computers where somebody actually looks at and monitors the CPU usage. And secondly, faking complex digital evidence is really hard. It seems like it is just binary data on a disk that you can do whatever you want with, but constructing a fake narrative that fits together is almost impossible. Which gives us a hopeful point, because in this era where everyone talks about false flag operations, you can actually find something that is the truth.”